Apple calls the Vision Pro a spatial computer. This headset draws its user into an immersive mixed-reality environment that merges the real world with the digital world through a combination of augmented reality and virtual reality technologies. The array of sensors in the Apple Vision Pro might seem like overkill, but it is one of the defining features that set the device apart from other mixed-reality and virtual reality headsets.

Understanding the Sensors in the Apple Vision Pro: What They Do and How They Work

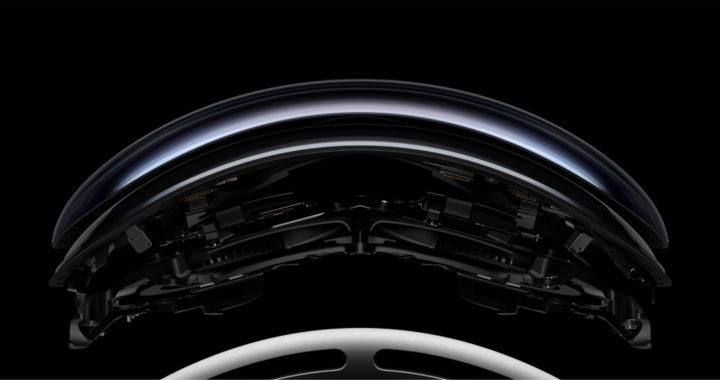

The Apple Vision Pro contains 12 cameras, 5 sensors, and 6 microphones. These components convert what they detect into digital input data. Apple's R1 chip is dedicated to processing this sensor input in real time, producing the data that shapes the mixed-reality environment. The specific sensors in the Apple Vision Pro are as follows:

1. Eight Cameras on the Exterior

There are 2 high-resolution main cameras, 4 high-resolution downward-facing cameras, and 2 high-resolution side cameras, for a total of 8 cameras on the exterior. They capture images and videos of the real world, map the environment, and assist with head and hand tracking. The system transmits over 1 billion pixels per second to the two micro-OLED displays inside the headset.

The visual input data are processed and rendered in real time, either displayed in 4K resolution or integrated into the rendered mixed-reality environment. Furthermore, because the Apple Vision Pro as a whole can be considered a three-dimensional camera, the exterior cameras collectively constitute its 3D camera system.

Apple has marketed the headset as its first 3D camera. The exterior camera system lets users capture spatial images and record spatial videos in three dimensions. These can be viewed in a panoramic orientation that wraps around the user, making viewers feel as if they were standing right where the images and videos were taken.

2. LiDAR Scanner

Light detection and ranging, or LiDAR, is an active remote sensing and digital imaging technique that fires pulses of near-infrared, visible, or ultraviolet light to measure the distance between target points and the sensor. It works by measuring how long each emitted pulse takes to reflect off a target and return to the sensor.
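
Because each pulse travels to the target and back, the distance is half the round-trip time multiplied by the speed of light: d = c × t / 2. The short Python sketch below illustrates only that arithmetic. The function name is hypothetical, and a real scanner adds a great deal of timing, calibration, and noise handling on top of it.

```python
# Time-of-flight ranging: the basic arithmetic behind a LiDAR distance measurement.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value by SI definition


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance (metres) for a pulse that took
    `round_trip_s` seconds to reach a target and reflect back."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A reflection that returns after 20 nanoseconds comes from about 3 m away.
    print(f"{distance_from_round_trip(20e-9):.3f} m")
```

Running it prints 2.998 m. Each metre of distance adds only about 6.7 nanoseconds to the round trip, which is why LiDAR depends on very precise timing.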

Apple first integrated a LiDAR scanner into the iPad Pro models released in 2020 (the 11-inch second-generation and 12.9-inch fourth-generation models). It was later included in the iPhone 12 Pro and iPhone 12 Pro Max. The primary function of this sensor is to create a three-dimensional map of the surroundings within its field of view. This map supports augmented reality applications, 3D scanning of objects and spaces, and improved autofocus in low light.

The LiDAR scanner is also one of the sensors in the Apple Vision Pro, placed at the bottom center of the headset's exterior. It emits pulses of light to build a 3D map of the environment that enables depth perception, occlusion, object recognition, and scene understanding, all of which are essential for mixed-reality rendering.
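
Occlusion is a good example of how such a depth map gets used. Conceptually, a virtual object should be drawn at a pixel only if it is closer to the viewer than the real surface measured there; otherwise a real object stands in front of it and should hide it. The Python sketch below shows that per-pixel depth test on tiny hand-made grids. The function name and data are hypothetical, and Apple has not published how the Vision Pro's rendering pipeline actually implements occlusion.

```python
from typing import List, Optional

# Depths are in metres; None marks a pixel where no virtual content is drawn.
DepthGrid = List[List[float]]
VirtualGrid = List[List[Optional[float]]]


def occlusion_mask(real_depth: DepthGrid, virtual_depth: VirtualGrid) -> List[List[bool]]:
    """Return True where the virtual object is visible, i.e. where it lies
    closer to the viewer than the real surface measured by the depth sensor."""
    mask = []
    for real_row, virtual_row in zip(real_depth, virtual_depth):
        mask.append([
            v is not None and v < r
            for r, v in zip(real_row, virtual_row)
        ])
    return mask


if __name__ == "__main__":
    # Real scene: a wall 1 m away, except a hand 0.5 m away in the bottom-left pixel.
    real = [[1.0, 1.0],
            [0.5, 1.0]]
    # A virtual panel placed 0.8 m away, covering every pixel except the top-right.
    virtual = [[0.8, None],
               [0.8, 0.8]]
    for row in occlusion_mask(real, virtual):
        print(row)
```

The hand at 0.5 m hides the panel in the bottom-left pixel, while the wall at 1 m sits behind the panel and does not.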

3. Four IR Cameras and Two LED Illuminators

The interior of the headset includes 4 infrared cameras and 2 LED illuminators, placed around the edges of the two micro-OLED displays. The main purpose of this interior camera and sensor system is to scan and track the user's eyes. It also supports the headset's eye calibration feature for more precise tracking and its vision correction feature for users who need prescription glasses.

Eye tracking is a defining feature of the Vision Pro because it lets users control the interface with their eyes. The interior cameras and sensors track eye movements and pupil dilation in real time to enable eye detection and gaze detection. They also work in sync with the sensors that track hand gestures, so eye and hand movements are processed and coordinated seamlessly.
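
This coordination of eyes and hands can be pictured as "look to target, pinch to select." The Python sketch below is a simplified illustration, not Apple's implementation: it assumes the gaze arrives as a 3D direction vector, that each on-screen target is described by the direction from the eye to its center, and that hand tracking reports a simple pinch flag. The function names, icon names, and the 2-degree tolerance are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two 3D direction vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norms == 0:
        raise ValueError("direction vectors must be non-zero")
    # Clamp to [-1, 1] so floating-point rounding never breaks acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))


def gazed_target(gaze_dir: Vec3, targets: Dict[str, Vec3],
                 tolerance_deg: float = 2.0) -> Optional[str]:
    """Return the target closest to the gaze direction, if within the tolerance."""
    best_name, best_angle = None, tolerance_deg
    for name, direction in targets.items():
        angle = angle_between_deg(gaze_dir, direction)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


def select_on_pinch(gaze_dir: Vec3, targets: Dict[str, Vec3],
                    pinch_detected: bool) -> Optional[str]:
    """The eyes choose the target; a pinch reported by hand tracking confirms it."""
    if not pinch_detected:
        return None
    return gazed_target(gaze_dir, targets)


if __name__ == "__main__":
    # Directions from the eye to two hypothetical app icons (-z points forward).
    icons = {"photos": (0.0, 0.0, -1.0), "music": (0.5, 0.0, -1.0)}
    gaze = (0.01, 0.0, -1.0)  # looking almost straight at "photos"
    print(select_on_pinch(gaze, icons, pinch_detected=False))  # nothing selected yet
    print(select_on_pinch(gaze, icons, pinch_detected=True))   # "photos" selected
```

Splitting the roles this way means that merely looking at something never triggers it; the hand gesture acts as the explicit confirmation.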

The interior camera and sensor system also enables EyeSight, which shows a view of the user's eyes on the exterior of the headset. This lets people nearby know when the user is fully immersed in the interface or mixed-reality environment. The same system powers Optic ID, an ocular biometric feature based on iris scanning that is used to unlock the device, autofill passwords, and make payments.

4. Two Apple TrueDepth Cameras

Apple introduced the TrueDepth camera with the iPhone X in 2017. It uses an infrared emitter to project thousands of invisible dots onto the face, creating a depth map of the face and capturing an infrared image of it. It powers Face ID for facial biometrics and recognition and also supports face motion tracking.
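
Projected-dot depth sensing generally relies on triangulation. Because the dot projector and the infrared camera sit a known distance apart (the baseline), a dot landing on a nearby surface appears shifted in the camera image compared with one on a distant surface, and the size of that shift (the disparity) reveals depth through Z = f × B / d. The Python sketch below applies that textbook formula. Apple does not publish TrueDepth's internal algorithm, and the function name, focal length, baseline, and disparity values are made-up examples.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth Z = f * B / d, in metres.

    focal_px     -- camera focal length expressed in pixels
    baseline_m   -- distance between the dot projector and the IR camera
    disparity_px -- how far a dot appears shifted from where it would land on a
                    very distant surface, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical sensor: 600 px focal length, 2 cm baseline.
    for d in (20.0, 40.0, 80.0):
        print(f"disparity {d:>4.0f} px -> depth {depth_from_disparity(600.0, 0.02, d):.2f} m")
```

Doubling the disparity halves the depth, so close surfaces such as a face produce large shifts that can be measured precisely.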

In the Apple Vision Pro, there are two TrueDepth cameras in the interior near the nose bridge. They scan the geometry of the user's head and ears to create a digital representation used for calibration. They also measure the distance between the eyes and the device so that the focus and alignment of the lenses can be adjusted accordingly. In effect, this system tailors the headset to the user's facial features.

The TrueDepth cameras also work together with the 4 IR cameras and 2 LED illuminators to enable tracking of eye movement and pupil dilation for eye control and gaze detection, Optic ID for biometric authentication, vision correction, and EyeSight for displaying the user's eyes on the headset's outer display.

5. Six Microphones or Audio Input

The Apple Vision Pro also has 6 microphones, with a set of three on each side of the headset. They serve as audio input sensors for voice control, spatial audio capture, ambient sound recording, and audio for communications and recordings.

The device can also be controlled with voice commands through Siri, Apple's voice assistant, which uses natural language processing (NLP) to perform various tasks and answer questions. Voice commands can be used to launch apps, adjust settings, make calls, and play video games. The same NLP capabilities support dictation, letting users enter text by voice.

The same microphones capture ambient sound in real time and record audio during video recording. Together, the microphones can capture sound for Spatial Audio. The system also uses noise cancellation and beamforming to improve the quality and clarity of captured audio.
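
Beamforming with a microphone array can be illustrated with the classic delay-and-sum technique. Sound from a chosen direction reaches each microphone at a slightly different time, so delaying each channel by the right amount lines those copies up before they are averaged, reinforcing that direction relative to others. The Python sketch below uses a simplified straight-line array, whole-sample delays, and hypothetical function names. Apple has not documented the Vision Pro's actual beamforming algorithm, and this sketch does not model the headset's real microphone layout.

```python
import math
from typing import List, Sequence

SPEED_OF_SOUND_M_PER_S = 343.0  # in air at roughly 20 °C


def arrival_delays(num_mics: int, spacing_m: float, angle_deg: float,
                   sample_rate_hz: float) -> List[int]:
    """Extra arrival time (whole samples) of a plane wave at each microphone of a
    uniform linear array, relative to microphone 0. angle_deg is measured from
    straight ahead; positive angles reach microphone 0 first."""
    theta = math.radians(angle_deg)
    return [round(m * spacing_m * math.sin(theta) / SPEED_OF_SOUND_M_PER_S * sample_rate_hz)
            for m in range(num_mics)]


def delay_and_sum(channels: Sequence[Sequence[float]], arrivals: Sequence[int]) -> List[float]:
    """Delay each channel so sound from the steered direction lines up across all
    microphones, then average. Sound from other directions stays misaligned and
    is partly averaged away."""
    latest = max(arrivals)
    length = len(channels[0])
    out = [0.0] * length
    for samples, arrival in zip(channels, arrivals):
        shift = latest - arrival  # compensating delay for this microphone
        for n in range(shift, length):
            out[n] += samples[n - shift]
    return [value / len(channels) for value in out]


if __name__ == "__main__":
    # Hypothetical 3-mic array, 3.43 cm spacing, 10 kHz sampling: a sound from 90°
    # reaches each successive microphone one sample later.
    arrivals = arrival_delays(3, 0.0343, 90.0, 10_000.0)
    click = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    channels = [[0.0] * a + click[:len(click) - a] for a in arrivals]
    print(delay_and_sum(channels, arrivals))  # steered toward the source
    print(delay_and_sum(channels, arrival_delays(3, 0.0343, -90.0, 10_000.0)))  # steered away
```

Steered toward the source, the click comes through at full strength (1.0); steered the opposite way, it is smeared into three copies at one-third strength.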

6. Accelerometer and Gyroscope

The device is also equipped with an accelerometer, a sensor that measures acceleration: both the static pull of gravity and the dynamic forces caused by movement. Smartphones use accelerometers to detect their orientation for features like auto-rotate, to detect motion such as shaking, and to assist turn-by-turn navigation. The Apple Vision Pro uses an accelerometer to track the movement of the head for better orientation in the mixed-reality environment.
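
Because gravity always pulls straight down, a reading taken while the head is still reveals how the device is tilted. The Python sketch below applies the standard atan2 formulas for pitch and roll. The function name and axis convention are assumptions for illustration, and while the head is moving the reading also contains motion, so these angles become unreliable on their own.

```python
import math
from typing import Tuple


def tilt_from_gravity(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Estimate pitch and roll in degrees from one accelerometer reading.

    Assumes the head is held still, so the reading is gravity alone, and an
    axis convention where z points up out of the sensor when it lies flat.
    Units cancel out, so readings in g or in m/s^2 both work.
    Yaw (turning left or right) cannot be recovered from gravity alone.
    """
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


if __name__ == "__main__":
    print(tilt_from_gravity(0.0, 0.0, 9.81))   # head level
    print(tilt_from_gravity(0.0, 9.81, 0.0))   # head rolled 90 degrees onto its side
    print(tilt_from_gravity(-9.81, 0.0, 0.0))  # head pitched 90 degrees
```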

A gyroscope is another sensor, one that measures orientation and angular velocity. Classic mechanical gyroscopes use a spinning wheel or disc whose axis of rotation is free to assume any orientation, whereas the compact gyroscopes built into smartphones and headsets are typically MEMS (micro-electromechanical) sensors that measure angular velocity from the Coriolis effect on a tiny vibrating structure. The Vision Pro uses this sensor to help track head movement and tilt, which keeps the user and his or her movements correctly oriented in the mixed-reality environment for a more immersive experience.
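
Because a gyroscope reports angular velocity rather than an angle, the headset must add up (integrate) its readings over time to know how far the head has turned. The Python sketch below shows single-axis integration with a hypothetical function name and sampling rate. It also illustrates the main weakness of gyroscopes: a small uncorrected bias accumulates into a growing error, known as drift.

```python
from typing import Iterable


def integrate_rotation(rates_deg_per_s: Iterable[float], dt_s: float,
                       bias_deg_per_s: float = 0.0) -> float:
    """Integrate single-axis gyroscope readings into a total rotation (degrees).

    Uses simple rectangular integration: each reading is assumed constant for
    one sampling interval dt_s. bias_deg_per_s is a known sensor offset to
    subtract; any bias that is not removed accumulates as drift.
    """
    angle = 0.0
    for rate in rates_deg_per_s:
        angle += (rate - bias_deg_per_s) * dt_s
    return angle


if __name__ == "__main__":
    dt = 0.01  # hypothetical 100 Hz sampling
    # Turning the head at 90 deg/s for one second adds up to about 90 degrees.
    print(integrate_rotation([90.0] * 100, dt))
    # A head held perfectly still, read by a gyroscope with a 0.5 deg/s bias,
    # appears to rotate about 30 degrees over one minute.
    print(integrate_rotation([0.5] * 6000, dt))
```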

In essence, the device uses its built-in accelerometer and gyroscope to detect left, right, forward, backward, upward, and downward movements. The accelerometer detects and tracks these movements, while the gyroscope tells the headset whether the user is turning or tilting his or her head. Together with the other sensors in the Apple Vision Pro, they track the movements and orientation of the user.
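
A common, simple way to combine the two sensors is a complementary filter: the gyroscope is trusted for quick movements, while the accelerometer's gravity-based tilt reading slowly corrects the gyroscope's drift. The Python sketch below fuses both for a single tilt axis; the function name, the 0.98 weighting, and the sample data are assumptions for illustration. Apple has not published how the Vision Pro fuses its motion data, and the headset also draws on its exterior cameras for head tracking, so its real pipeline is likely far more elaborate.

```python
from typing import Iterable, List


def complementary_filter(gyro_rates_deg_per_s: Iterable[float],
                         accel_angles_deg: Iterable[float],
                         dt_s: float, alpha: float = 0.98,
                         initial_deg: float = 0.0) -> List[float]:
    """Fuse gyroscope rates with accelerometer tilt angles for one axis (e.g. pitch).

    Each step follows the integrated gyroscope for fast changes (weight alpha)
    and pulls gently toward the accelerometer's gravity-based angle
    (weight 1 - alpha), which keeps gyroscope drift from accumulating.
    """
    angle = initial_deg
    estimates = []
    for rate, accel_angle in zip(gyro_rates_deg_per_s, accel_angles_deg):
        angle = alpha * (angle + rate * dt_s) + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


if __name__ == "__main__":
    # Head held still at 10 degrees of pitch for one minute at 100 Hz. The gyroscope
    # has a 0.5 deg/s bias, which on its own would drift by about 30 degrees.
    steps = 6000
    fused = complementary_filter([0.5] * steps, [10.0] * steps, dt_s=0.01, initial_deg=10.0)
    print(f"{fused[-1]:.3f}")  # settles near 10.245 instead of drifting away
```

The small steady offset (0.245 degrees here) comes from the uncorrected bias; raising alpha makes the estimate smoother but lets that offset grow.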