Imagine if your thoughts could be translated into full sentences using a recording of your brain activity. While this may sound like something straight out of a science fiction film, this futuristic possibility is now one step closer to becoming reality.

New Frontiers in Neural Decoding: How Artificial Intelligence and Noninvasive Imaging Translate Brain Activity Into Descriptive Natural Language

Mind captioning, an emerging approach to neural decoding, shows how advanced brain imaging and AI can work together to reveal mental content. The findings suggest that viewed scenes and recalled memories produce rich semantic patterns in the brain. This opens new possibilities for assistive technologies and for deeper insight into human cognition.

How Mind Captioning Became Possible

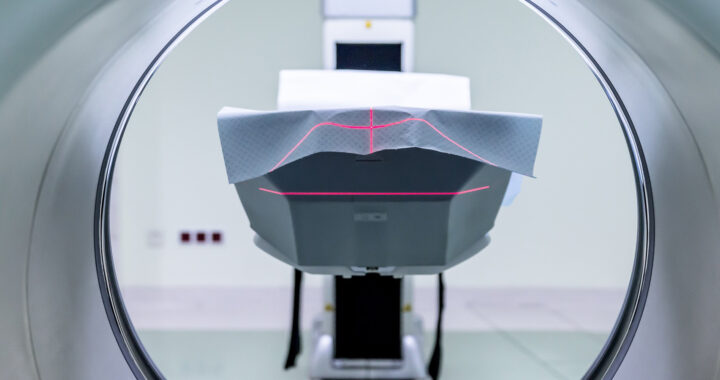

A study published on 5 November 2025 in Science Advances by Tomoyasu Horikawa of NTT Communication Science Laboratories showed that brain activity recorded through functional magnetic resonance imaging can be translated into descriptive sentences. The system uses artificial intelligence models to turn neural patterns into meaning-rich language.

Researchers have long wondered whether thoughts, memories, and mental scenes leave patterns detailed enough for computers to interpret. Horikawa built on earlier experiments that decoded viewed images and dream content. The new work goes further by trying to capture the deeper meaning behind complex visual scenes rather than isolated objects.

To do this, functional magnetic resonance imaging data were collected from six volunteers while they watched more than 2,000 short captioned video clips. A deep language model converted each caption into a semantic vector, a list of numbers that encodes important details such as actions, objects, relationships, and settings.

A decoder was then trained to map each participant's whole-brain activity to the corresponding semantic vectors. When new data arrived from participants who were viewing or recalling scenes, the decoder predicted the matching semantic vector. A text generator then produced descriptive sentences whose meaning matched the decoded vector as closely as possible.
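The decoding step can be illustrated with a small, hypothetical sketch. It assumes a linear ridge-regression decoder, a common choice in fMRI decoding but not necessarily the study's exact configuration. The example uses synthetic data in place of real scans. The names `fit_ridge_decoder`, `decode`, and `simulate` are illustrative. The 3-dimensional "semantic vectors" and 8 "voxels" stand in for the much larger vectors and whole-brain recordings used in the study. The text-generation stage is not modeled.

```python
import random

def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_ridge_decoder(brain, semantic, alpha=1.0):
    """Fit weights W (voxels x dims) minimizing ||brain @ W - semantic||^2 + alpha * ||W||^2."""
    n_vox, n_dim = len(brain[0]), len(semantic[0])
    # Regularized Gram matrix X^T X + alpha * I (positive definite when alpha > 0).
    gram = [[sum(x[i] * x[j] for x in brain) + (alpha if i == j else 0.0)
             for j in range(n_vox)] for i in range(n_vox)]
    weights = [[0.0] * n_dim for _ in range(n_vox)]
    for d in range(n_dim):
        # One ridge solution per semantic dimension: (X^T X + alpha I) w_d = X^T y_d.
        xty = [sum(x[i] * y[d] for x, y in zip(brain, semantic)) for i in range(n_vox)]
        for i, w in enumerate(solve(gram, xty)):
            weights[i][d] = w
    return weights

def decode(weights, pattern):
    """Map one brain-activity pattern (list of voxel values) to a predicted semantic vector."""
    return [sum(p * row[d] for p, row in zip(pattern, weights)) for d in range(len(weights[0]))]

# Hypothetical synthetic data: semantic vectors drive voxels through a random mixing matrix plus noise.
random.seed(0)
N_DIM, N_VOX = 3, 8
mixing = [[random.gauss(0, 1) for _ in range(N_VOX)] for _ in range(N_DIM)]

def simulate(n):
    """Generate n (brain pattern, semantic vector) pairs."""
    sem = [[random.gauss(0, 1) for _ in range(N_DIM)] for _ in range(n)]
    brain = [[sum(s[d] * mixing[d][v] for d in range(N_DIM)) + random.gauss(0, 0.1)
              for v in range(N_VOX)] for s in sem]
    return brain, sem

train_brain, train_sem = simulate(200)
test_brain, test_sem = simulate(20)
W = fit_ridge_decoder(train_brain, train_sem, alpha=1.0)
predicted = [decode(W, b) for b in test_brain]
mse = sum((p - t) ** 2 for pv, tv in zip(predicted, test_sem)
          for p, t in zip(pv, tv)) / (len(test_sem) * N_DIM)
print(f"held-out mean squared error: {mse:.4f}")
```

The regularization strength `alpha` keeps the solution stable when voxels far outnumber training clips, which is the usual situation with real whole-brain data.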

Notable Findings and Insights

The results show that neural activity can be linked to surprisingly rich descriptions of what a person sees or remembers. They also give a clearer view of how meaning is stored across the brain, and they point to new opportunities for communication tools powered by artificial intelligence and noninvasive imaging. Key findings include the following:

• Generation of Structured Descriptive Sentences

The system produced full descriptive sentences containing actions, objects, relationships, and contextual details. This goes well beyond earlier methods, which offered only simple tags. The generated descriptions reflected the layered structure of the original scenes in considerable detail.

• Successful Decoding of Recalled Mental Content

The decoder worked not only when participants watched videos but also when they recalled them from memory. This suggests that the brain uses similar semantic structures during recall and perception. It also allowed the system to interpret internal mental content without any visible stimulus.

• Decoding Outside Classical Language Regions

Accurate decoding remained possible even when the frontal and temporal language areas were excluded from the analysis. This suggests that meaning is represented across many brain regions, including higher visual and parietal areas. It challenges the long-standing idea that semantics resides mainly in language-specific networks.

• Superior Performance of Semantic Models

In higher cortical regions, semantic embeddings produced by deep language models outperformed visual models. This supports the view that these areas are more concerned with conceptual meaning and interactions than with colors, shapes, or motion patterns.

• Matching of Decoded and True Semantic Vectors

Predicted semantic vectors aligned closely with the ground-truth vectors, so the viewed clips could be identified with accuracy well above chance. The system was also sensitive to changes in word order. This indicates that it captured structured meaning rather than functioning as a simple keyword-matching tool.
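Identification "well above chance" can be made concrete with a short sketch. Here each decoded vector is compared with the true semantic vector of every candidate clip, and the clip with the highest Pearson correlation is chosen. With N candidates, random guessing succeeds 1/N of the time. This correlation-based scoring is an assumption for illustration. The helper names are hypothetical, and the data are synthetic (true vectors plus heavy noise) rather than results from the study.

```python
import math
import random

def pearson(a, b):
    """Pearson correlation between two equal-length vectors."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)

def identify(predicted, candidates):
    """Index of the candidate vector that correlates best with the predicted vector."""
    return max(range(len(candidates)), key=lambda i: pearson(predicted, candidates[i]))

def identification_accuracy(predictions, truths):
    """Fraction of predictions whose best match among all true vectors is their own.

    Chance level is 1 / len(truths).
    """
    hits = sum(identify(p, truths) == i for i, p in enumerate(predictions))
    return hits / len(predictions)

# Hypothetical demo: 50 "clips" with 50-D semantic vectors; decoded vectors = truth + noise.
random.seed(1)
N_CLIPS, DIM = 50, 50
truths = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(N_CLIPS)]
decoded = [[v + random.gauss(0, 1) for v in t] for t in truths]
acc = identification_accuracy(decoded, truths)
print(f"identification accuracy {acc:.2f} vs chance {1 / N_CLIPS:.2f}")
```

Even when the noise is as large as the signal, the correct clip usually has the highest correlation, because unrelated 50-dimensional vectors correlate only weakly with one another.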

What Comes Next for Mind Captioning

The findings open the door to new noninvasive communication technologies for people who cannot express language through speech or movement. Although functional magnetic resonance imaging is not yet practical for routine use, the demonstrated pipeline offers an initial blueprint for future thought-to-text systems.

The study also expands our understanding of how the brain stores meaning. Rather than confining information to isolated language hubs, the brain distributes semantic details broadly, so complex scenes can be reconstructed from dispersed patterns. This insight invites new research into how different regions cooperate during thought.

Functional magnetic resonance imaging still limits real-world applications because scanners are costly, large, and slow to collect data. Even so, the successful mapping between neural signals and semantic vectors provides a clear path for future studies. As imaging tools improve, similar decoding systems may eventually operate outside laboratory settings.

Further Reading and Reference

- Horikawa, T. 2025. “Mind Captioning: Evolving Descriptive Text of Mental Content From Human Brain Activity.” Science Advances 11(45). DOI: 10.1126/sciadv.adw1464